[Update 2: Phil Pressel just released his book Meeting the Challenge: The Hexagon KH-9 Reconnaissance Satellite (get it at AIAA or Amazon). I've ordered it and will post a review after I get it.]

From 1957 to 1965, a high tech startup called Itek made the world's most sophisticated satellite reconnaissance cameras for a single customer -- the CIA. The company has a fascinating subsequent history: they went on to build cameras for Apollo and Viking, and eventually the DB-110 reconnaissance pod, which I'll do a blog post on some day.

Merton Davies at RAND apparently originated the idea of using a spinning camera mounted on a spin-stabilized satellite to take panoramic shots with a narrow-angle lens. Amrom Katz passed the concept to Walter Levinson at Boston University Physical Research Laboratory (BUPRL). Levinson refined the idea to that of using an oscillating lens, for use in the HYAC-1 panoramic camera in Air Force high altitude reconnaissance balloons. Itek bought BUPRL just a few weeks after incorporating in late 1957, while the lab was developing the HYAC-1.

Soon after, the CIA contacted Itek to discuss the camera requirements for the first spy satellites. All of these initial satellites came to use the rotating panoramic camera. I think this is the KH-4A or KH-4B Corona.

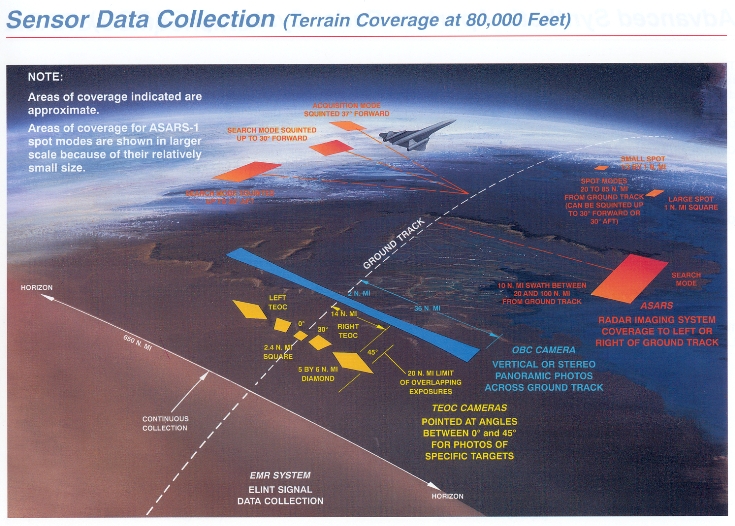

Itek also built versions of these cameras for use in the U-2 and SR-71 aircraft, in which they were called the OBC (Optical Bar Camera, named for the appearance of the field of regard on the ground). These were first used in the 1960s and are still in use today. Here is an Itek Optical Bar Camera that goes in the nose of a U-2:

Take a look at the big, wide blue bar under the airplane. That's what a single frame of the camera shot. It's huge. I've heard that this "bar" looking frame was why they called it the optical bar camera. However, the NRO's Hexagon documentation refers to the rotating optical assembly (what in many cameras is called the "Optical Tube Assembly") as the optical bar.

After a string of successful programs, requirements ratcheted up and tensions grew between NRO and CIA over the next program, Fulcrum. Early on, Itek was contracting with both the NRO and the CIA on competing projects. Itek pulled out of the CIA's project, and some combination of the NRO and the CIA took all their (government-owned) work and gave the job to Perkin-Elmer. When the dust settled the project was renamed Hexagon. Perkin-Elmer built the KH-9 Optical Bar Camera with their own design rather than Itek's, as they didn't think the Itek design would work. Here is a look into the aperture of the KH-9 Optical Bar Camera.

The Itek OBCs in the U-2, SR-71, and Apollo spacecraft all had a rotating structure called the roller cage, which I suspect was fixed to the rotating camera. The Perkin-Elmer design in the KH-9 deleted the roller cage and the rotating fold mirror inside it, and instead had a servo controlled twisting platen.

Here is a comparison of various optical bar cameras built up to 1971 (the launch of the first KH-9).

| | KA-80 (U-2/SR-71/Apollo) | (U-2/SR-71) | (KH-9) |
|---|---|---|---|
| Focal length | 610 mm (24 inches) | 760 mm (30 inches) | 1524 mm (60 inches) |
| Aperture | 174 mm (f/3.5) | 218 mm (f/3.5?) | 508 mm (f/3.0) |
| Cross-track Field of View | 108 degrees | 140 degrees | 120 degrees |
| Film width | 127 mm (5 inches) | 127 mm (5 inches) | 168 mm (6.6 inches) |
| Film length | 2005 m (6500 feet) | 3200 m (10500 feet) | 70,000 m (230,000 feet) |
| Format | 114 x 1073 mm | 114 x 1857 mm | 155 x 3190 mm |
| Film resolution | 3.7 micron | 3.7 micron | 1000:1 contrast: 3.7 micron; 1.6:1 contrast: 7.4 micron |
| Depth of focus | +/- 13 microns | +/- 13 microns | +/- 11 microns |
| Format resolution | 31k x 290k = 9 Gpix | 31k x 502k = 15 Gpix | 42k x 862k = 36 Gpix |
| Frames | 1650 | 1640 | 21,000 |
| Nominal Altitude | 24.4 km (80k feet) | 24.4 km (80k feet) | 152 km (82 nm) |
| Center ground resolution | 14.8 cm | 11.9 cm | 37 cm |
| Swath | 67 km | 134 km | 555 km |
| In-track field of view, center | 4.55 km | 3.66 km | 15 km |
| Nominal overlap | 55% | 55% | 10% (each camera) |
| Area collected | 226k km2 | 362k km2 | 2x 80m km2 |
| Nominal ground velocity | 1000 m/s | 1000 m/s | 7,800 m/s |
| Cycle time | 2 sec | 1.65 sec | 1.73 sec |
| Film velocity at slit | 1.9 m/s | 2.9 m/s | 5.5 m/s |
| Maximum slit size | 7.62 mm | 12? mm | 22? mm |
| Max exposure time | 4 ms | 4? ms | 4? ms |
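Several rows of the table can be roughly reproduced from the others with simple geometry. Here is a minimal Python sketch of that arithmetic. It assumes a flat Earth at nadir, a 3.7 micron film "pixel", one full rotation of the optical assembly per cycle, and a depth of focus equal to pixel size times f-number. The function name and these relationships are assumptions chosen for illustration because they land close to the table, not documented formulas from Itek or Perkin-Elmer.

```python
import math

PIXEL_UM = 3.7  # assumed film "pixel" size, taken from the film resolution row


def derive(focal_mm, f_number, format_width_mm, altitude_km, overlap, ground_speed_m_s):
    """Recompute a few table rows from the basic camera parameters."""
    # In-track footprint at nadir: format width projected through the lens.
    in_track_km = altitude_km * format_width_mm / focal_mm
    # Time to fly the non-overlapping part of one frame.
    cycle_s = in_track_km * 1000.0 * (1.0 - overlap) / ground_speed_m_s
    return {
        "aperture_mm": focal_mm / f_number,
        "depth_of_focus_um": PIXEL_UM * f_number,
        "ground_res_cm": altitude_km * 1e5 * (PIXEL_UM * 1e-3) / focal_mm,
        "in_track_km": in_track_km,
        "cycle_s": cycle_s,
        # Image speed at the focal plane for one rotation per cycle: f * omega.
        "film_speed_m_s": (focal_mm / 1000.0) * 2.0 * math.pi / cycle_s,
    }


ka80 = derive(610, 3.5, 114, 24.4, 0.55, 1000)
sr71 = derive(760, 3.5, 114, 24.4, 0.55, 1000)
kh9 = derive(1524, 3.0, 155, 152, 0.10, 7800)

for name, cam in (("KA-80", ka80), ("U-2/SR-71", sr71), ("KH-9", kh9)):
    print(name, {key: round(value, 2) for key, value in cam.items()})
```

The aircraft cameras come out within rounding of the table. The KH-9 cycle time comes out near 1.78 seconds rather than 1.73, because the table appears to round the in-track field of view down to 15 km before dividing.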

Take a look at the area collected by the KH-9. The Soviet Union was a big place: 20m km2. Each of the four film re-entry capsules could return the entire USSR, in stereo, with plenty of side overlap and margin for images fouled by clouds. Typically they chose to take more frames with smaller swaths (90 degrees or 60 degrees) to get higher average resolution, which brought down the total take somewhat to around 60 million km2.

My resolution numbers up there are slightly inflated. The film used could only eke out 130 lp/mm when given the maximum possible amount of contrast, such as would be seen at a shadow line. For finding something like paint on a road they were about half that. Pixellated sensors have a much more drastic cliff, of course. So the KH-9 resolution above, for example, might be comparable to something like a 20 to 30 gigapixel camera today. I'll note that I don't have any of those to suggest.

There are two big concepts here that I think are important. The first is the mechanical and logistical difficulties of using film. Below I've spelled out some of the details. The second is that despite these headaches, until very recently, film has been superior in many ways to electronic sensors for massive survey work.

The basic problems with using film stem from the fact that the sensor surface is a thin, pliable, moving, relatively inexpensive object that has to be held within the camera with very high precision. There must have been several problems associated with getting the film aligned within +/- 11 microns of the lens's focal plane. Among other things, the machines resemble Van de Graaff generators, and so the film is subject to static electricity buildup and discharges, along with heating that tends to make it even more pliable and sticky. To reduce the static buildup, many of these cameras slid the film over surfaces with hundreds of pores lifting the film with pressurized air.

I think the Itek designs spun the entire optical assembly at a constant rate. The entire spinning optical assembly is inside a gimbal which rocks back and forth 1.6 degrees in each cycle. The rocking motion accomplishes forward motion compensation, so that the sweep of the slit across the ground is orthogonal to the direction of flight. This compensation ensures that the motion of the image on the film moves almost exactly with the film, and there is no blurring in the direction of flight during longer exposures. This rocking motion must have required fairly large torques, and I'm sure this is one of the things that the Perkin-Elmer folks balked at when considering the Itek design in space. Note that a constantly rotating camera sweeps at the outer edges faster than at the center, so the forward motion compensation probably had to vary its rate of rocking as it went to compensate.

Here is a diagram which shows how the film was wrapped around the roller cage in the Itek designs. As the optical assembly (including the roller cage) twists counterclockwise, the film is transported clockwise.

However, even with a constant spin rate, the film does not transport at a constant rate. For instance, in the SR-71 OBC, a frame is exposed for 640 ms, during which time the film rips around the roller cage at 2.9 meters per second (that's what the rollers see). For the next second, the film advances at just 1 meter per second, so that the frame boundary going across the top of the roller cage can meet up with the slit going around the bottom. Because of the speed change, many of the freewheeling rollers on the roller cage will contact unexposed film coming from the framing roller with a tangential speed difference of 1.9 meters per second. As each freewheeling roller changes speed to match the film, it seems to me it would tend to scuff the film. I'm sure they made sure to make those rollers as lightweight as possible to reduce their rotational momentum.
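The 640 ms and 2.9 meters per second figures follow from the table if the optical assembly makes one rotation per cycle and only the 140 degree field of view is exposed. A small Python sketch of that bookkeeping, with a hypothetical function name and the one-rotation-per-cycle assumption stated up front:

```python
def exposure_window(cycle_s, fov_deg, format_length_mm):
    """Split one camera cycle into exposing and non-exposing time.

    Assumes one full rotation per cycle at constant rate, so the exposing
    fraction of the cycle is simply fov_deg / 360.
    """
    exposure_s = cycle_s * fov_deg / 360.0
    idle_s = cycle_s - exposure_s
    # The whole frame length has to pass the slit during the exposure.
    film_speed_m_s = (format_length_mm / 1000.0) / exposure_s
    return exposure_s, idle_s, film_speed_m_s


# SR-71 OBC values from the table: 1.65 s cycle, 140 degrees, 1857 mm frame.
exposure_s, idle_s, film_speed_m_s = exposure_window(1.65, 140, 1857)
print(f"exposing for {exposure_s * 1000:.0f} ms at {film_speed_m_s:.2f} m/s, "
      f"then {idle_s:.2f} s of slow advance")
```

The slower 1 meter per second advance during the rest of the cycle is taken from the text rather than derived here.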

Note the unlabeled slit after the second mirror right next to the film wrapped around the roller cage. Only the portion of the film after this in the optical chain has light on it, so this is the only spot that must be held accurately. I don't really know how it was done, since every moving belt of material that I've ever seen has vibrated. They may have had a glass reseau plate that the film slid across. Sliding film across glass at 2.9 meters per second seems like an excellent way to scratch one or both. I have no evidence for it yet, but this seems like a good application for the compressed air film handling scheme.

The Itek forward motion compensation gimbal also took out airplane pitch motions. Airplane roll motions were taken out by varying the optical tube roll rate. That's easy enough (it doesn't require torque), but the film rate supplied to the roller cage assembly in the back also had to change to match.

That last diagram gives a pretty good clue to another challenge in this design -- film curvature. Although I've not found any labelled dimensions, it looks like the roller cage in the Itek designs was about 300 mm in diameter. The design of the roller cage really had me puzzled for a while, because the film transport path is cylindrical, but the film being exposed by the slit has to be flat. I finally figured it out when I took a good look at this photo of the Apollo OBC (also made by Itek):

The roller cage is a cylindrical cage of ROLLERS! Duh! As the film passes between the rollers, it's locally flat, and that's how they keep the film surface matched to the lens focal plane. Here's a 5x blowup of the roller cage in the picture above. It looks like the rollers are packed together as close as they can get in order to minimize the polygonal variation in film distance from the center of roller cage rotation. I think this variation leads to a (small) variation in film speed at the exposure site.

There should be a spot in the roller cage where there aren't any rollers, and the film becomes planar for a while. This spot would be over the exposure slit. In the Apollo OBC pictured here, the gap between the rollers must be at least 7.6mm, and given the orientation of the lens at the top it should be on the back side of the roller cage that we don't see here.

The film is pulled taut around the stuttering, bucking and twisting roller cage with an assembly that looks like the following. During the exposure film is pulled around the roller cage at 5.7 meters/second. Here's the film path:

If the tension on the film is too small, small irregularities in its "set" curvature will make it lift off as it goes around the roller cage, and small patches of the film will lift away from the cage. With a +/- 11 micron depth of focus, it doesn't take much lift off to make a problem. If the tension is too high, the film will wrinkle longitudinally.

The Perkin-Elmer design did not have the roller cage or the gimbal. Instead, they had a twisting platen assembly at the focal plane. This would twist back and forth through 130 degrees as the optical bar rotated through 360 degrees. The two were nearly locked together through the 120 degrees of rotation that were used for exposure.

Because the Perkin-Elmer design has no rocker gimbal doing forward motion compensation, and the optical assemblies rotate at constant speed, the sweep is faster at the edges than in the center, and the area swept in each frame is slightly S shaped. They may have splayed the roll axes of the optical bars to get first order forward motion compensation, but this doesn't change the S shape. To keep the image from smearing across the film, the KH-9 has to keep the slit perpendicular to the motion of the slit across the ground, accounting for the changing sweep rate versus spacecraft velocity, as well as the rotation of the Earth, which is 6% of the spacecraft velocity at the equator.
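The 6% figure is easy to check. This is a rough Python sketch using the standard equatorial radius and sidereal day, and the 7,800 m/s nominal ground velocity from the table; the variable names are just for illustration.

```python
import math

EARTH_EQUATORIAL_RADIUS_M = 6_378_137.0  # WGS 84 equatorial radius
SIDEREAL_DAY_S = 86_164.1                # one rotation of the Earth
GROUND_VELOCITY_M_S = 7_800.0            # nominal KH-9 value from the table

# Speed of a point on the equator due to the Earth's rotation.
equator_speed_m_s = 2.0 * math.pi * EARTH_EQUATORIAL_RADIUS_M / SIDEREAL_DAY_S
ratio = equator_speed_m_s / GROUND_VELOCITY_M_S

print(f"equator moves at {equator_speed_m_s:.0f} m/s, {ratio:.1%} of ground velocity")
```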

This is why the twisting platen in the KH-9 is servo locked to the twisting optical assembly. They have to vary the relative twist of the two a little bit to keep the projected slit perpendicular to its projected motion.

After the picture was shot the film sat around for a month in a temperature and humidity controlled environment, and then was dropped, recovered, and developed. There was a lot of opportunity for the film to shrink differentially. All the mechanical twisting, as well as film shrinkage, must have made photogrammetry a nightmare.

The Sunny 16 rule says that you can properly expose ISO 100 film in bright daylight conditions with an f/16 lens with a 10 ms exposure. The KH-9 used mostly monochrome Kodak 1414, which has an Aerial Film Speed of 15, which I think is equivalent to ISO 38. In full sun a 1 ms exposure at f/3 would have worked fine. On the Apollo OBC that corresponds to a 1.9mm wide slit. They did have exposure control hardware, and it looks like they could expose for two stops dimmer than full sun. They might have also stopped down from that, in order to avoid blowouts over ice.
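The exposure arithmetic can be written out as a short Python sketch. It takes the Sunny 16 rule at face value (shutter time of 1/ISO seconds at f/16, scaling with the square of the f-number) and uses the ISO 38 equivalence guessed at above, so treat the result as a rough estimate. The function names are hypothetical.

```python
def sunny16_exposure_s(iso, f_number):
    """Full-sun exposure time from the Sunny 16 rule."""
    # 1/ISO seconds at f/16, scaled by the light gathered relative to f/16.
    return (1.0 / iso) * (f_number / 16.0) ** 2


def slit_width_mm(film_speed_m_s, exposure_s):
    """Slit width that gives the requested exposure at a given film speed."""
    return film_speed_m_s * exposure_s * 1000.0


reference = sunny16_exposure_s(100, 16)  # the textbook case: 10 ms
full_sun = sunny16_exposure_s(38, 3.0)   # assumed ISO 38 film at f/3

print(f"ISO 100 at f/16: {reference * 1000:.1f} ms")
print(f"ISO 38 at f/3:   {full_sun * 1000:.2f} ms")
print(f"slit at 1.9 m/s for 1 ms: {slit_width_mm(1.9, 0.001):.1f} mm")
```

The computed full-sun exposure is a little under 1 ms, which is where the rounded 1 ms and the 1.9 mm slit at 1.9 meters per second come from.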

At this point, I'm sure those of you who have worked with digital sensors are feeling pretty smug. But consider how wonderful film was for capturing and returning large amounts of imagery.

Each of the four re-entry vehicles on the KH-9 would bring back 5,000 36 gigapixel images. If somehow compressed to 1 bit per pixel, that would be about 20 terabytes. These days that's about 10 hard disks, and would take about three months to downlink at a constant 25 megabits per second. Since they were returning these re-entry vehicles every few months, a modern downlink is only barely adequate. It has only been in the last 10 years or so that disk drive capacities have become high enough to fit the data into the payload of one of those re-entry vehicles -- 30 years after the KH-9 was originally deployed.
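Here is the back-of-the-envelope calculation behind those numbers as a minimal Python sketch. The 1 bit per pixel compression and the 25 megabit per second link are the hypothetical figures from the paragraph above, not properties of any real system.

```python
IMAGES_PER_CAPSULE = 5_000
PIXELS_PER_IMAGE = 36e9    # 36 gigapixels, from the format resolution row
BITS_PER_PIXEL = 1.0       # hypothetical compression level
DOWNLINK_BITS_PER_S = 25e6

total_bits = IMAGES_PER_CAPSULE * PIXELS_PER_IMAGE * BITS_PER_PIXEL
terabytes = total_bits / 8.0 / 1e12
downlink_days = total_bits / DOWNLINK_BITS_PER_S / 86_400.0

print(f"{terabytes:.1f} TB per capsule, {downlink_days:.0f} days to downlink")
```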

In 1971, the area of film actively integrating photons in the KH-9 was 155 mm x 15 mm. The largest, fastest TDI CCD sensors commercially available in 2013 are 64 mm x 1.3 mm. The pixels on these are 5.2 microns rather than 3.7 as on film. The smaller integrating length (1.3 mm versus 22 mm) gives a maximum exposure time of 240 microseconds, which is smaller than the 2 milliseconds we would prefer. 155 mm x 10 mm CCDs with 3.7 micron pixels are not commercially available, but could probably be custom made.

Another issue would be the readout rate. A fast TDI in 2013 reads out lines at 90 kHz. The KH-9 was exposing film in the roller cage at 5.5 meters/second, which corresponds to a line readout rate of 1.5 MHz. This could be achieved with a custom built TDI sensor maybe 5 years ago. It would require 1300 ADCs running at 45 MHz, which would burn a lot of power. This might be possible with interesting packaging.
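The sensor numbers in the last two paragraphs can be checked with a few lines of Python. This sketch assumes 3.7 micron pixels across the full 155 mm format and one ADC conversion per pixel; the variable names are illustrative only.

```python
FILM_SPEED_M_S = 5.5      # KH-9 film velocity at the slit, from the table
PIXEL_M = 3.7e-6          # assumed pixel pitch matching the film resolution
FORMAT_WIDTH_M = 0.155    # KH-9 format width
TDI_LENGTH_M = 1.3e-3     # integrating length of the 2013 commercial TDI
ADC_RATE_HZ = 45e6

# One line must be read out each time the image moves by one pixel.
line_rate_hz = FILM_SPEED_M_S / PIXEL_M
pixels_per_line = FORMAT_WIDTH_M / PIXEL_M
adc_count = line_rate_hz * pixels_per_line / ADC_RATE_HZ

# Longest exposure the short TDI can give at this image speed.
max_exposure_us = TDI_LENGTH_M / FILM_SPEED_M_S * 1e6

print(f"line rate {line_rate_hz / 1e6:.2f} MHz, about {adc_count:.0f} ADCs, "
      f"max exposure {max_exposure_us:.0f} microseconds")
```

The ADC count comes out closer to 1400 than 1300, which is within the slop of these estimates.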

The capture rate of the KH-9 was so enormous it completely overwhelmed the ability of the photointerpreters at the CIA to examine the photographs. It's only recently that computers have gotten large enough to store and process imagery at this volume, and it's not at all clear that anyone has yet developed algorithms to find the "unknown unknowns" in a bunch of raw imagery. I think they call this "uncued image search".

To my knowledge the U.S. never again fielded a spysat with the ability to survey major portions of the earth at high resolution. Later Keyhole satellites appear to have concentrated more on taking valuable single shots at higher resolution (7 cm GSD), and on having the orbital maneuverability to get those shots. I think the intelligence folks lost interest in survey satellites when it became clear that they couldn't take advantage of the comprehensive coverage which was their primary feature. It's kind of ironic that the very problem that Itek was founded to solve (managing the huge volume of survey photography) ended up being a major reason why satellites with survey capacity made possible by Itek's cameras faded away. It's fascinating for me to see what has become of this problem.

Brian McClendon is on record as saying that Google has 5 million miles and 20 petabytes of Street View imagery. That's the processed imagery, not the raw take. The KH-9 raw take that overwhelmed the CIA photointerpreter capacity 30 years ago was less than 1% of what Google Street View shot. Unlike the KH-9 imagery, most of which I suspect has never been looked at, every one of the Street View panoramas has been seen by a real user. And Google is hardly the only organization using Big Data like this. The consumption problem that the CIA and NRO never solved has been utterly crushed by organizations with entirely different goals. (Granted, uncued search remains unsolved.)

Now that Big Data has caught up with the photo throughput of digital satellites, it's fun to think about what could be built with modern technologies. But that's probably best saved for another post.